Introduction to Convolutional Neural Networks

Introduction

The feed-forward neural network model can be extended using constraints from specific applications. One such extension is the Convolutional Neural Network (CNN), which uses only local connections and shared weights. Translation invariance is a property that results from those constraints, and it is very useful in image and signal processing. For instance, CNNs have been used for postal code recognition [LeCun 1990] and phoneme recognition [Waibel 1989].

This article presents the elements of a CNN and a basic application in image processing: edge detection (a kind of "hello world" application for CNNs).

Translation Invariance

Edge Detection

Edge detection is the process of finding the boundaries between dark and bright pixels in an image. There are many approaches to this problem. Here we present a neural network learning approach, in which the network is given a sample input image and the desired output image:

Figure: Input Image

Figure: Output Image (edge detection)

A fully connected neural network is not a good approach because the number of connections is too large and the network is hard-wired to a single image size. At the learning stage, we would also have to present the same image at many shifts; otherwise the edge detection would work at only one position, which would be useless.

Exploiting the properties of this application, we assume:

- Translation invariance

- Local information processing

Edge detection should work the same way wherever the input image is placed. This class of problem is called a translation invariant problem.

The translation invariance property leads to the question: why create a fully connected neural network? There is no need for full connections, because we always work with finite images. The farther away a connection is, the less it matters to the computation.

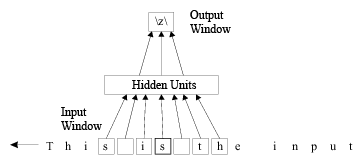

Shift Windows

If the problem requires only local information, we can create an input/output window, applying the same mapping function. This window can shift over the input/output data resulting in a translation invariant processor.
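The shifting window can be sketched in a few lines of Python. This is a minimal illustration, not the article's Matlab code: the helper names `shift_window` and `step_detector`, the window size of 3 and the test signal are all assumptions chosen for the example.

```python
def shift_window(signal, window_size, mapping):
    """Apply the same mapping to every window of the signal (no padding)."""
    return [mapping(signal[i:i + window_size])
            for i in range(len(signal) - window_size + 1)]


def step_detector(window):
    # Hypothetical local mapping: last sample minus first sample of the window.
    return window[-1] - window[0]


signal = [0, 0, 0, 1, 1, 1, 0, 0, 0, 0]
shifted = [0, 0] + signal[:-2]   # the same pattern moved two positions right

out = shift_window(signal, 3, step_detector)
out_shifted = shift_window(shifted, 3, step_detector)

print(out)          # [0, 1, 1, 0, -1, -1, 0, 0]
print(out_shifted)  # [0, 0, 0, 1, 1, 0, -1, -1]
```

Because the same mapping is reused at every position, moving the input pattern by two samples moves the response by two samples and leaves its shape unchanged.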

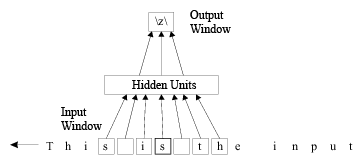

For instance, NetTalk [Sejnowski 1987] computes the correct pronunciation from a sequence of letters. There is no need to know the whole phrase to know the correct pronunciation of an "s"; only the nearest letters are required for this computation:

Figure: NetTalk

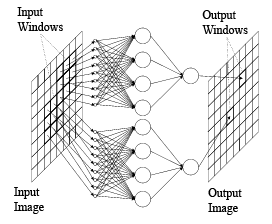

Image Processing

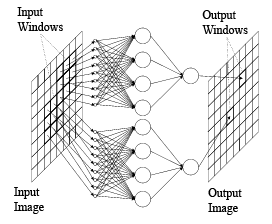

In image processing, we can apply a two-dimensional window that shifts in two dimensions. See [Spreeuwers 1993].

Figure: Image Shift Window

Note that this approach uses hidden units that are not shared as the window shifts. This is biologically implausible and possibly a waste of resources.
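The two-dimensional shift window can be sketched as a nested loop. The example below is a simplification under stated assumptions: it uses a single linear mapping with a hand-picked 3×3 mask instead of a trained network with hidden units, and the names `shift_window_2d` and `laplacian` as well as the 5×5 test image are illustrative only.

```python
def shift_window_2d(image, mask):
    """Slide one weight mask over a 2D image (list of rows), no padding."""
    mask_h, mask_w = len(mask), len(mask[0])
    rows = len(image) - mask_h + 1
    cols = len(image[0]) - mask_w + 1
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            total = 0
            for i in range(mask_h):
                for j in range(mask_w):
                    total += mask[i][j] * image[r + i][c + j]
            row.append(total)
        out.append(row)
    return out


# Hypothetical hand-picked mask: responds where a pixel differs
# from its four direct neighbours.
laplacian = [[0, -1, 0],
             [-1, 4, -1],
             [0, -1, 0]]

# A bright 3x3 square on a dark background.
image = [[0, 0, 0, 0, 0],
         [0, 1, 1, 1, 0],
         [0, 1, 1, 1, 0],
         [0, 1, 1, 1, 0],
         [0, 0, 0, 0, 0]]

response = shift_window_2d(image, laplacian)
for row in response:
    print(row)
# [2, 1, 2]
# [1, 0, 1]
# [2, 1, 2]
```

The response is non-zero along the border of the square and zero in its interior, which is the kind of output an edge detector should produce.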

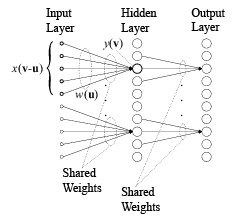

Shared Weights Neural Network

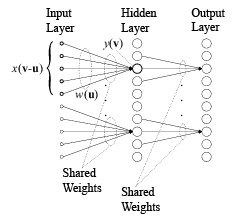

Hidden units can have shift windows too:

Figure: Shared Hidden Units

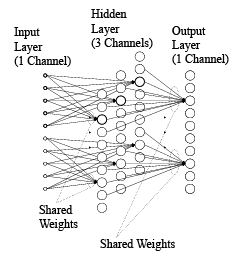

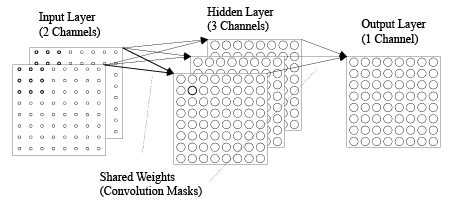

This approach results in a hidden layer that is translation invariant. But now this layer recognizes only one translation invariant feature, which can leave the output layer unable to detect some desired feature. To fix this problem, we can add multiple translation invariant hidden layers:

Figure: Shared Weight Neural Networks

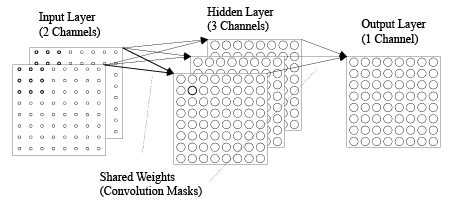

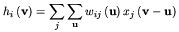

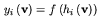

This model is known as the Shared Weight Neural Network (SWNN), also known as the Convolutional Neural Network. The signal propagation is:

and

Where y is the neuron activation of a layer; w are the shared weights; and x is the neuron activation of the previous layer.
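One way to write this propagation explicitly is sketched below. It assumes a two-dimensional layer, a mask of shared weights indexed by the offsets (k, l), an intermediate weighted sum called net and an activation function f; the index names, net and f are assumptions introduced for illustration.

```latex
net_{i,j} = \sum_{k}\sum_{l} w_{k,l}\, x_{i+k,\,j+l}
\qquad\text{and}\qquad
y_{i,j} = f\left(net_{i,j}\right)
```

The same weights w are used for every position (i, j), which is what makes the layer translation invariant.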

This model was presented by several articles:

- [Rumelhart 1986] - "T-C problem";

- [Waibel 1989] - "Time-Delay Neural Networks" (TDNN);

- [Wilson 1989], [Wilson 1992] - "Iconic Neural Networks";

- [LeCun 1990], [LeCun 1995] - "Convolutional Neural Networks".

Convolutional Neural Network

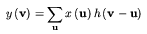

The Shared Weight Neural Network can be rewritten using the convolution operation. Convolution can be computed with the Fast Fourier Transform (FFT), which can improve performance (see [Dudgeon 1984] for the multidimensional FFT).
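The link between convolution and the Fourier transform can be checked numerically. The sketch below is one-dimensional and uses a naive O(n²) discrete Fourier transform as a stand-in for a real FFT, so it illustrates the principle rather than the speed-up; the function names and test values are assumptions made for this example.

```python
import cmath


def dft(values, inverse=False):
    """Naive O(n^2) discrete Fourier transform (stand-in for a real FFT)."""
    n = len(values)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        total = sum(v * cmath.exp(sign * 2j * cmath.pi * k * t / n)
                    for t, v in enumerate(values))
        out.append(total / n if inverse else total)
    return out


def convolve_direct(g, h):
    """Discrete convolution: out[n] = sum_k g[k] * h[n - k]."""
    out = [0.0] * (len(g) + len(h) - 1)
    for k, gk in enumerate(g):
        for m, hm in enumerate(h):
            out[k + m] += gk * hm
    return out


def convolve_fourier(g, h):
    """Zero-pad, multiply the spectra pointwise, then transform back."""
    size = len(g) + len(h) - 1
    g_pad = list(g) + [0.0] * (size - len(g))
    h_pad = list(h) + [0.0] * (size - len(h))
    product = [a * b for a, b in zip(dft(g_pad), dft(h_pad))]
    return [value.real for value in dft(product, inverse=True)]


mask = [1.0, 0.0, -1.0]
signal = [1.0, 2.0, 4.0, 7.0, 11.0]

direct = convolve_direct(mask, signal)
via_fourier = convolve_fourier(mask, signal)

# The two results agree up to floating-point rounding.
print(max(abs(a - b) for a, b in zip(direct, via_fourier)) < 1e-9)
```

With a true FFT the transform costs O(n log n), so the Fourier route tends to pay off for large masks and images.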

Convolution is defined as:

It is usual to represent this operation with a "*" symbol:

This way, the Shared Weight Neural Network propagation can be rewritten as:

and
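For reference, the standard discrete two-dimensional convolution and its "*" shorthand can be written as below, followed by one way to express the shared-weight propagation with it. Here x, w and y are the previous layer's activation, the shared weights and the layer's activation; the index names, the intermediate sum net, the activation function f and the flipped mask \tilde{w} are assumptions for illustration. The flip accounts for the sign difference between a sliding weighted sum and a convolution.

```latex
(g * h)_{i,j} = \sum_{k}\sum_{l} g_{k,l}\, h_{i-k,\,j-l}
\\[1ex]
net = \tilde{w} * x, \qquad \tilde{w}_{k,l} = w_{-k,-l}
\qquad\text{and}\qquad
y = f\left(net\right)
```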

This results in the model:

Figure: Convolutional Neural Network (CNN)

Note that each set of shared weights corresponds to a convolution mask.
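The correspondence between shared weights and a convolution mask can be verified on a small one-dimensional example. In this sketch the function names and the sample values are assumptions; the point is that a sliding weighted sum with weights `w` equals a convolution with the reversed weights.

```python
def shared_weight_sum(x, w):
    """Sliding weighted sum: out[i] = sum_k w[k] * x[i + k]."""
    n = len(x) - len(w) + 1
    return [sum(w[k] * x[i + k] for k in range(len(w))) for i in range(n)]


def convolve_full(g, h):
    """Discrete convolution: out[n] = sum_k g[k] * h[n - k]."""
    out = [0] * (len(g) + len(h) - 1)
    for k, gk in enumerate(g):
        for m, hm in enumerate(h):
            out[k + m] += gk * hm
    return out


x = [1, 2, 4, 7, 11]   # activations of the previous layer
w = [1, 0, -1]         # one set of shared weights

direct = shared_weight_sum(x, w)

mask = w[::-1]                      # the convolution mask is the reversed weights
full = convolve_full(mask, x)
valid = full[len(w) - 1:len(x)]     # keep positions where the mask fits entirely

print(direct)  # [-3, -5, -7]
print(valid)   # [-3, -5, -7]
```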

Learning Algorithm

The Convolutional Neural Network has fewer connections, and the shared-weight constraint reduces the solution space.
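A rough count shows how strong this reduction is. The figures below are assumptions chosen for illustration (a 32×32 image, 5×5 masks, three channels, a single layer, biases ignored); they are not the sizes used in the article's experiment.

```python
def fully_connected_weights(height, width):
    """Every output pixel is connected to every input pixel."""
    pixels = height * width
    return pixels * pixels


def shared_weights(mask_height, mask_width, channels=1):
    """One mask per channel, reused at every position of the image."""
    return mask_height * mask_width * channels


fc = fully_connected_weights(32, 32)
sw = shared_weights(5, 5, channels=3)

print(fc)  # 1048576
print(sw)  # 75
```

The shared-weight count also does not depend on the image size, so the same masks can be applied to images of any dimensions.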

The back-propagation learning algorithm can be used to train the CNN with a few adaptations. The network can be seen as a fully connected neural network in which the non-local connections are zero. The back-propagated error and the update factor must be averaged over the translations, resulting in a single set of updates for the shared weights (see [Rumelhart 1986] and [Waibel 1989]).
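The averaging of updates over translations can be sketched for the simplest possible case. The example assumes a single one-dimensional linear layer with no activation function and a squared-error criterion; the function names, the learning rate of 0.5, the 500 iterations and the training signal are all assumptions made for this illustration, not the article's training setup.

```python
def forward(x, w):
    """Linear shared-weight layer: y[i] = sum_k w[k] * x[i + k]."""
    n = len(x) - len(w) + 1
    return [sum(w[k] * x[i + k] for k in range(len(w))) for i in range(n)]


def shared_weight_update(x, w, target, rate):
    """One gradient step; each weight's update is averaged over all positions."""
    y = forward(x, w)
    errors = [t - yi for t, yi in zip(target, y)]
    new_w = []
    for k in range(len(w)):
        # Position i contributes errors[i] * x[i + k] to the update of weight k.
        per_position = [errors[i] * x[i + k] for i in range(len(errors))]
        new_w.append(w[k] + rate * sum(per_position) / len(per_position))
    return new_w


x = [0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]
# Desired output: the difference x[i + 1] - x[i], i.e. the mask [-1, 1, 0].
target = [0.0, 1.0, 0.0, 0.0, -1.0]

w = [0.0, 0.0, 0.0]
for _ in range(500):
    w = shared_weight_update(x, w, target, rate=0.5)

print([round(value, 3) for value in w])
```

Every window position votes on the same three weights, so one training image already provides several training examples for the mask.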

Convolutional Neural Network for Edge Detection

CNN with the input/output training images:

Figure: Input Image (Training)

Figure: Output Image (Training)

Figure: Output Results After Training

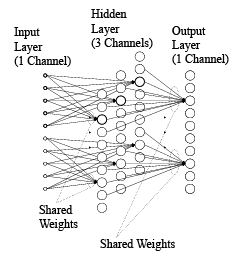

Layer activations:

| Input Layer (1 Channel) | Hidden Layer (3 Channels) | Output Layer (1 Channel) |

The hidden layers respond to partial edges, somewhat like the real neurons described in Eye, Brain and Vision [Hubel 1995]. There are probably no "shared weights" among the brain's neurons, but something very similar could be achieved by presenting patterns that shift across our field of view.

Source Code

The Convolutional Neural Network was implemented in Matlab 5.3 (R11). The source code is free for academic use, but it comes with no warranty or support. To run the edge detection example, type:

» cd rnc/edge

» main

For playback, type:

» history_activation('history');

Bibliography

[Dudgeon 1984] Dudgeon, Dan E.; Mersereau, Russell M.; 1984. Multidimensional Digital Signal Processing. Englewood Cliffs, NJ: Prentice-Hall.

[Hubel 1995] Hubel, David H.; 1995. Eye, Brain, and Vision. New York: Scientific American Library.

[LeCun 1990] LeCun, Y.; Boser, B.; Denker, J. S. et al.; 1990. Handwritten Digit Recognition with a Back-Propagation Network. In David Touretzky. Advances in Neural Information Processing Systems 2 (NIPS *89). Denver: Morgan Kaufmann.

[LeCun 1995] LeCun, Y.; Bengio, Y.; 1995. Convolutional networks for images, speech, and time-series. In Arbib, M. A. The Handbook of Brain Theory and Neural Networks. MIT Press.

[Rumelhart 1986] Rumelhart, David E.; Hinton, G. E.; Williams, R. J.; 1986. Learning Internal Representations by Error Propagation. In Parallel Distributed Processing, Cambridge: M. I. T. Press, v. 1, p. 318-362.

[Sejnowski 1987] Sejnowski, T. J.; Rosenberg, C. R.; 1987. Parallel Networks that Learn to Pronounce English Text. Complex Systems, v. 1, p. 145-168.

[Spreeuwers 1993] Spreeuwers, Luuk; 1993. Image Filtering with Neural Networks. In: SMBT Meeting on Measurement and Artificial Neural Networks (3. : Nov. 1993 : Utrecht, Netherlands) Proceedings. p. 37-52.

[Waibel 1989] Waibel, Alexander; Hanazawa, Toshiyuki; Hinton, Geoffrey et al.; 1989. Phoneme Recognition Using Time-Delay Neural Networks. IEEE Transactions on Acoustics, Speech and Signal Processing, v. ASSP-37, n. 3 (March), p. 328-339.

[Wilson 1989] Wilson, Stephen S.; 1989. Vector Morphology and Iconic Neural Networks. IEEE Transactions on Systems, Man, and Cybernetics, v. 19, n. 6 (November/December), p. 1636-1644.

[Wilson 1992] Wilson, Stephen S.; 1992. Translation Invariant Neural Networks. In Soucek, Branko. Fast Learning and Invariant Object Recognition: the Sixth-Generation Breakthrough. New York: John Wiley & Sons, p. 125-151.
